Deploying a Scalable Machine Learning Service on Kubernetes

Deploying machine learning models efficiently and reliably in production is a core MLOps skill. This tutorial series is a hands-on, end-to-end guide to deploying a machine learning service on Kubernetes, written for beginners who want to learn MLOps practices.

Reading time: 15 min

Tags: [MLOps, Kubernetes, Machine Learning, FastAPI, Docker, Podman, Scikit-Learn, Sentiment Analysis, API Deployment, Model Serving, Auto-scaling, HPA, Prometheus, Monitoring, Containerization, Dev]

- A Comprehensive Tutorial on Deploying a Scalable Machine Learning Service on Kubernetes

  - Overview of the Tutorial

  - What You’ll Learn

  - Technical Stack

  - Topics Covered

    - Introduction & Project Overview

    - Setting Up Podman & Kind

    - Creating a Kubernetes Cluster

    - Deploying Persistent Storage

    - Setting Up ConfigMap

    - Deploying the ML Application

    - Exposing the Service & Auto-Scaling

    - Setting Up Prometheus for Monitoring

    - Testing the API & Metrics

    - Debugging & Troubleshooting

    - Conclusion & Next Steps

  - Video Tutorial Series

  - Resources Used

  - Conclusion

A Comprehensive Tutorial on Deploying a Scalable Machine Learning Service on Kubernetes

GitHub Repository: kubernetes-ml-deployment

Overview of the Tutorial

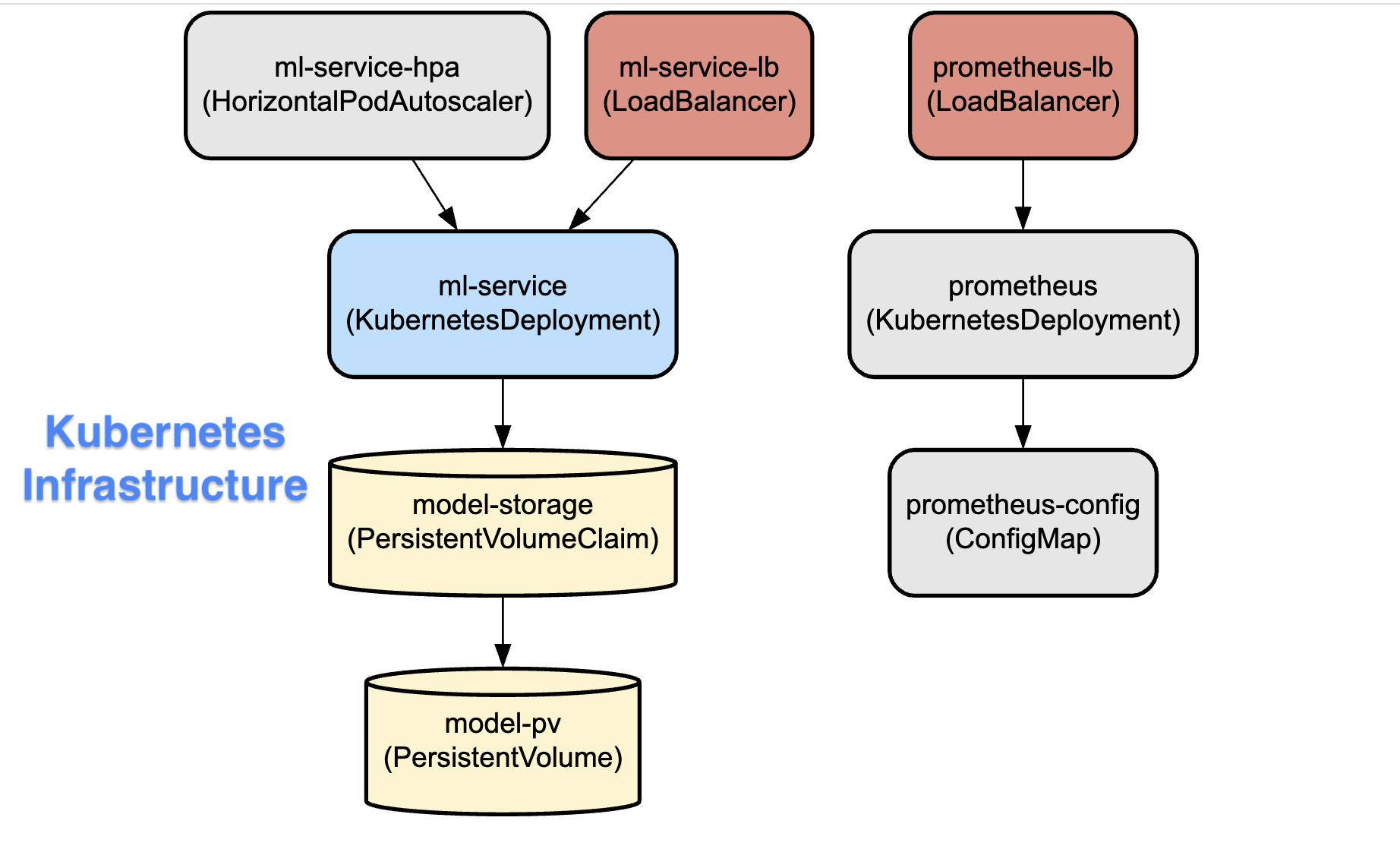

This tutorial covers the entire pipeline of deploying a Sentiment Analysis model, from model development to a scalable, monitored production deployment on Kubernetes.

Here’s a glimpse of what you will build and learn:

- Sentiment Analysis Model developed with Scikit-Learn for natural language processing tasks.

- A FastAPI-based REST API serving the model, enabling easy, low-latency inference.

- Containerization using Docker or Podman to package the application and dependencies.

- Kubernetes Deployment, including setup of clusters, persistent storage for model artifacts, and auto-scaling using the Horizontal Pod Autoscaler (HPA).

- Real-time monitoring using Prometheus to track application health and performance metrics.

This project equips you with practical MLOps skills to deploy and maintain machine learning applications at scale.
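To make the first building block concrete, here is a minimal sketch of a sentiment classifier written as a Scikit-Learn pipeline. The toy training sentences, the choice of TF-IDF features with logistic regression, and the names `TRAIN_TEXTS`, `TRAIN_LABELS`, and `build_model` are assumptions made for illustration; the model in the repository may be built differently.

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import make_pipeline

# Tiny illustrative dataset (assumption): 1 = positive, 0 = negative.
TRAIN_TEXTS = [
    "I love this product, it is great",
    "great service and wonderful support",
    "absolutely love it, wonderful experience",
    "I hate this product, it is terrible",
    "terrible service and awful support",
    "absolutely hate it, awful experience",
]
TRAIN_LABELS = [1, 1, 1, 0, 0, 0]


def build_model():
    """Return a fitted TF-IDF + logistic regression pipeline."""
    model = make_pipeline(TfidfVectorizer(), LogisticRegression())
    model.fit(TRAIN_TEXTS, TRAIN_LABELS)
    return model


if __name__ == "__main__":
    model = build_model()
    # predict() accepts raw strings because vectorization is part of the pipeline.
    print(model.predict(["what a wonderful, great day"]))
```

Keeping the vectorizer and the classifier in one pipeline object means a single artifact can be saved, placed on persistent storage, and loaded by the API without a separate preprocessing step.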

What You’ll Learn

- Building and training a sentiment analysis model with Scikit-Learn.

- Designing a RESTful API using FastAPI for seamless model inference.

- Containerizing ML services with Docker or Podman for portability.

- Creating and managing Kubernetes clusters with Kind (Kubernetes in Docker).

- Configuring Persistent Volumes (PV) and Persistent Volume Claims (PVC) for reliable storage.

- Managing application configurations with Kubernetes ConfigMaps.

- Implementing Horizontal Pod Autoscaling (HPA) for dynamic load management.

- Setting up Prometheus monitoring for real-time insights and alerts.

- Testing APIs and Prometheus metrics along with common debugging practices.

Technical Stack

- Machine Learning: Scikit-Learn

- API Framework: FastAPI

- Containerization: Docker, Podman

- Orchestration: Kubernetes, Kind

- Storage: Persistent Volumes in Kubernetes

- Auto-scaling: Horizontal Pod Autoscaler (HPA)

- Monitoring: Prometheus

Topics Covered

Introduction & Project Overview

- Understand the scope and architecture of the deployment pipeline.

Setting Up Podman & Kind

- Install and configure Podman and Kind for local Kubernetes cluster management.

Creating a Kubernetes Cluster

- Initialize and configure your Kubernetes environment.

Deploying Persistent Storage

- Configure Persistent Volumes and Claims for storing model data.
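As a rough illustration of how a Persistent Volume and its Claim fit together, the sketch below builds both manifests as Python dictionaries and prints them as JSON. The resource names, the `manual` storage class, the `hostPath` location, and the helper `build_storage_manifests` are hypothetical; the tutorial's own manifests may use different values.

```python
import json


def build_storage_manifests(name="model-storage", size="1Gi", host_path="/mnt/models"):
    """Build a matching PersistentVolume / PersistentVolumeClaim pair."""
    pv = {
        "apiVersion": "v1",
        "kind": "PersistentVolume",
        "metadata": {"name": f"{name}-pv"},
        "spec": {
            "capacity": {"storage": size},
            "accessModes": ["ReadWriteOnce"],
            "storageClassName": "manual",
            # hostPath is only suitable for local, single-node test clusters.
            "hostPath": {"path": host_path},
        },
    }
    pvc = {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": f"{name}-pvc"},
        "spec": {
            # Access mode and storage class must be compatible with the PV,
            # and the request must fit within its capacity, for binding.
            "accessModes": ["ReadWriteOnce"],
            "storageClassName": "manual",
            "resources": {"requests": {"storage": size}},
        },
    }
    return pv, pvc


if __name__ == "__main__":
    pv, pvc = build_storage_manifests()
    manifest = {"apiVersion": "v1", "kind": "List", "items": [pv, pvc]}
    print(json.dumps(manifest, indent=2))
```

Because `kubectl` accepts JSON as well as YAML, the printed output could be saved to a file and applied with `kubectl apply -f`.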

Setting Up ConfigMap

- Manage application settings with Kubernetes ConfigMaps.
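ConfigMap keys can be injected into a container as environment variables, so on the application side the settings are usually just read from the environment. The sketch below shows one way to do that; the variable names `MODEL_PATH`, `LOG_LEVEL`, and `MAX_TEXT_LENGTH`, their defaults, and the `Settings` class are assumptions for illustration only.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class Settings:
    """Application settings, expected to be supplied by a ConfigMap."""

    model_path: str
    log_level: str
    max_text_length: int

    @classmethod
    def from_env(cls, environ=None):
        # Accept a plain dict so the loader can be exercised without
        # touching the real process environment.
        env = os.environ if environ is None else environ
        return cls(
            model_path=env.get("MODEL_PATH", "/models/sentiment.joblib"),
            log_level=env.get("LOG_LEVEL", "INFO").upper(),
            max_text_length=int(env.get("MAX_TEXT_LENGTH", "512")),
        )


if __name__ == "__main__":
    print(Settings.from_env())
```

Keeping the defaults in code means the container still starts when a key is missing from the ConfigMap, which can make local testing easier.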

Deploying the ML Application

- Containerize and deploy your ML model API on Kubernetes.

Exposing the Service & Auto-Scaling

- Make your service accessible and configure HPA to handle varying workloads.
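The scaling decision itself follows a simple rule documented for the Horizontal Pod Autoscaler: the desired replica count is the current count multiplied by the ratio of the observed metric to the target metric, rounded up, and no change is made when that ratio is within a small tolerance of 1. The sketch below reproduces that arithmetic; the function name, the replica bounds, and the example numbers are illustrative assumptions, and the real controller also handles details such as missing metrics and stabilization windows.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Approximate the HPA replica calculation for a single metric."""
    ratio = current_metric / target_metric
    # Skip scaling when the metric is already close enough to the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the bounds configured on the autoscaler.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # Two pods averaging 90% CPU against a 50% target.
    print(desired_replicas(2, 90, 50))
```

Working through a few values by hand like this can help when choosing a CPU target and replica bounds for the service.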

Setting Up Prometheus for Monitoring

- Integrate Prometheus to monitor system and application metrics.
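Prometheus scrapes metrics over HTTP in a plain-text exposition format. To show what the application has to expose, here is a standard-library sketch that counts requests and renders them in that format. The metric name `app_requests_total`, its labels, and the `RequestMetrics` class are hypothetical; in practice a client library such as `prometheus_client` would normally generate this output.

```python
from collections import Counter


class RequestMetrics:
    """Count requests per endpoint and status, rendered for Prometheus."""

    def __init__(self):
        self._counts = Counter()

    def observe(self, endpoint, status):
        self._counts[(endpoint, status)] += 1

    def render(self):
        # HELP and TYPE lines describe the metric; each sample line is
        # name{labels} value.
        lines = [
            "# HELP app_requests_total Total HTTP requests handled.",
            "# TYPE app_requests_total counter",
        ]
        for (endpoint, status), value in sorted(self._counts.items()):
            lines.append(
                f'app_requests_total{{endpoint="{endpoint}",status="{status}"}} {value}'
            )
        return "\n".join(lines) + "\n"


if __name__ == "__main__":
    metrics = RequestMetrics()
    metrics.observe("/predict", 200)
    metrics.observe("/predict", 200)
    metrics.observe("/predict", 500)
    print(metrics.render(), end="")
```

Serving the string returned by `render()` from a `/metrics` route is what would let a Prometheus scrape job collect these counters.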

Testing the API & Metrics

- Validate the deployed API and monitor Prometheus outputs.
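A quick way to validate the deployed API is to send it a JSON request from a script. The sketch below uses only the standard library; the base URL `http://localhost:8000`, the `/predict` path, and the `{"text": ...}` payload shape are assumptions that should be adjusted to match how the service is actually exposed.

```python
import json
import urllib.request


def build_predict_request(base_url, text):
    """Build a POST request carrying the text to classify as JSON."""
    body = json.dumps({"text": text}).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/predict",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def call_predict(base_url, text, timeout=5):
    """Send the request and return the decoded JSON response."""
    request = build_predict_request(base_url, text)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


if __name__ == "__main__":
    # Requires the service to be reachable, e.g. through a port-forward.
    print(call_predict("http://localhost:8000", "I love this tutorial"))
```

Separating request construction from sending keeps the payload easy to inspect when a call fails.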

Debugging & Troubleshooting

- Identify and resolve common issues encountered in production deployments.

Conclusion & Next Steps

- Summarize learnings and suggest paths for further enhancement.

Video Tutorial Series

Follow along with my step-by-step video series, "Hands-On End-to-End ML Model Deployment on Kubernetes - Auto-Scaling & Monitoring". The playlist includes:

- Part 1: Introduction & Project Setup

- Part 2: Setup Podman and Install Kind

Resources Used

- GitHub Repository: kubernetes-ml-deployment

- Docker Hub Image: abonia/ml-tutorial

- Podman Installation Guide

- Docker Desktop Download

- Kind (Kubernetes in Docker) Quick Start

- Kubernetes Official Documentation

- Prometheus Documentation

- FastAPI Documentation

- Scikit-Learn Supervised Learning

Conclusion

Mastering the deployment of machine learning models on Kubernetes unlocks the potential to build robust, scalable, and maintainable AI-powered applications. Whether you’re a beginner stepping into MLOps or a developer aiming to enhance your deployment pipeline, this tutorial offers practical insights to elevate your skills.

Stay connected for updates, more tutorials, and guides by subscribing to my newsletter and following on LinkedIn and GitHub.
